I didn’t attend the W3C’s Do Not Track and Beyond Workshop last week, but I heard reports from several attendees that instead of looking forward, participants spent a lot of time looking backwards at last decade’s W3C web privacy standard, the Platform for Privacy Preferences (P3P). P3P is a computer-readable language for privacy policies. The idea was that websites would post their privacy policies in P3P format and web browsers would download them automatically and compare them with each user’s privacy settings. In the event that a privacy policy did not match the user’s settings, the browser could alert the user, block cookies, or take other actions automatically. Unlike the proposals for Do Not Track being discussed by the W3C, P3P offers a rich vocabulary with which websites can describe their privacy practices. The machine-readable code can then be parsed automatically to display a privacy “nutrition label” or icons that summarize a site’s privacy practices.
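A toy model may help make the matching step concrete. The Python sketch below is not P3P itself. Real P3P policies are XML documents with far more structure, and the `Statement`, `Preferences`, `Action`, and `evaluate` names, as well as the matching rule, are hypothetical simplifications for illustration. Only the purpose, recipient, and data-category strings are borrowed from the P3P 1.0 vocabulary.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn the user"
    BLOCK_COOKIES = "block cookies"


@dataclass(frozen=True)
class Statement:
    """One simplified policy statement: which data, used for what, shared with whom."""
    data: frozenset[str]
    purposes: frozenset[str]
    recipients: frozenset[str]


@dataclass(frozen=True)
class Preferences:
    """Hypothetical user settings: practices the user objects to and how to react."""
    objectionable_purposes: frozenset[str]
    objectionable_recipients: frozenset[str]
    on_mismatch: Action = Action.WARN


def evaluate(policy: list[Statement], prefs: Preferences) -> tuple[Action, list[str]]:
    """Compare each statement in a site's policy with the user's preferences."""
    reasons: list[str] = []
    for st in policy:
        for purpose in sorted(st.purposes & prefs.objectionable_purposes):
            reasons.append(f"uses {sorted(st.data)} for {purpose}")
        for recipient in sorted(st.recipients & prefs.objectionable_recipients):
            reasons.append(f"shares {sorted(st.data)} with {recipient}")
    # Any objection triggers the user's chosen reaction; otherwise allow.
    return (prefs.on_mismatch if reasons else Action.ALLOW), reasons


# Example: a site that logs navigation data for its own use, but also shares
# online contact data with unrelated third parties for telemarketing.
site_policy = [
    Statement(frozenset({"navigation"}), frozenset({"admin", "develop"}), frozenset({"ours"})),
    Statement(frozenset({"online"}), frozenset({"telemarketing"}), frozenset({"unrelated"})),
]
prefs = Preferences(
    objectionable_purposes=frozenset({"telemarketing"}),
    objectionable_recipients=frozenset({"unrelated"}),
    on_mismatch=Action.BLOCK_COOKIES,
)
action, reasons = evaluate(site_policy, prefs)
print(action.value)  # block cookies
for reason in reasons:
    print(" -", reason)
```

The point of the sketch is that the comparison is entirely mechanical: once a site's practices are machine-readable, the browser can act without the user ever reading the policy. That same property is what makes a misleading machine-readable policy so effective.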

Having personally spent a good part of seven years working on the P3P 1.0 specification, I can’t help but perk up my ears whenever I hear P3P mentioned. I believe that P3P was, and still is, a really good idea. In hindsight, there are all sorts of technical details that should have been worked out differently, but the key ideas remain as compelling today as they were when first discussed in the mid-1990s. Indeed, with increasing frequency I have discussions with people who are trying to invent a new privacy solution that actually looks an awful lot like P3P.

Sadly, the P3P standard is all but dead and practically useless to end users. While P3P functionality has been built into the Microsoft Internet Explorer (IE) web browsers for the past decade, today thousands of websites, including some of the web’s most popular sites, post bogus P3P “compact policies” that circumvent the default P3P-based cookie-blocking system in Internet Explorer. For example, Google transmits the following compact policy, which tricks IE into believing that Google’s privacy policy is consistent with the default IE privacy setting and therefore its cookies should not be blocked.

P3P:CP="This is not a P3P policy! See http://www.google.com/support/accounts/bin/answer.py?hl=en&answer=15165 for more info."

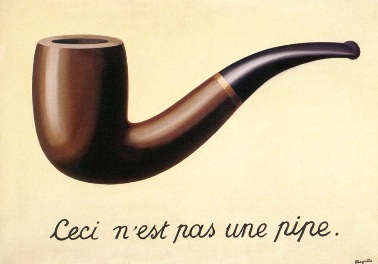

Google’s approach is both clever and (with apologies to Magritte) surreal. The website transmits the code that means, “I am about to send you a P3P compact policy.” And yet the content of the policy says “This is not a P3P policy!” Thus, to IE this is a P3P policy, and yet to a human reader it is not. Since P3P is computer-readable code that was never designed for human readers, I argue that it is a P3P policy, and a deceptive one at that. The issue got a flurry of media attention last February and was then quickly forgotten. The United States Federal Trade Commission or any of the 50 state attorneys general (or even a privacy commissioner in one of the many countries that now have privacy commissioners to enforce privacy laws) could go after Google or any of the thousands of other websites that have posted deceptive P3P policies. However, to date, no regulator has announced that it is investigating any website for a deceptive P3P policy. For their part, a number of companies and industry groups have said that circumventing IE’s privacy controls is acceptable because they consider the P3P standard to be dead (even though Microsoft still makes active use of it in the latest version of its browser and the W3C has not retired it).
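Why would IE accept a header like Google's? A simplified model helps. The Python sketch below assumes, as was widely reported at the time, that IE skips compact-policy tokens it does not recognize rather than rejecting the whole policy. The token list follows the P3P 1.0 compact-policy vocabulary, but the blocking rule (`OBJECTIONABLE`) and the function names are hypothetical and are not Internet Explorer's actual default-setting logic. The `strict` flag shows the alternative design, in which any unrecognized token invalidates the policy.

```python
from __future__ import annotations

import re

# Compact-policy tokens from the P3P 1.0 vocabulary, grouped for readability.
PURPOSES = {"CUR", "ADM", "DEV", "TAI", "PSA", "PSD", "IVA", "IVD", "CON", "HIS", "TEL", "OTP"}
RECIPIENTS = {"OUR", "DEL", "SAM", "UNR", "PUB", "OTR"}
OTHER_TOKENS = {
    "NOI", "ALL", "CAO", "IDC", "OTI", "NON",       # access
    "DSP", "COR", "MON", "LAW", "NID", "TST",       # disputes, remedies, non-identifiable, test
    "NOR", "STP", "LEG", "BUS", "IND",              # retention
    "PHY", "ONL", "UNI", "PUR", "FIN", "COM", "NAV", "INT", "DEM",
    "CNT", "STA", "POL", "HEA", "PRE", "LOC", "GOV", "OTC",  # data categories
}
# Purpose and recipient tokens may carry a suffix: a = always, i = opt-in, o = opt-out.
SUFFIXES = {"a", "i", "o"}

# Hypothetical rule for this sketch only (NOT IE's real logic): object to contact,
# telemarketing, and individual profiling, and to sharing beyond the site and its
# agents, unless the user must opt in.
OBJECTIONABLE = {"CON", "TEL", "IVA", "IVD", "UNR", "PUB", "OTR"}


def split_token(token: str) -> tuple[str, str] | None:
    """Return (base, suffix) for a recognized token, or None if unrecognized."""
    if token in PURPOSES | RECIPIENTS | OTHER_TOKENS:
        return token, ""
    base, suffix = token[:3], token[3:]
    if base in PURPOSES | RECIPIENTS and suffix in SUFFIXES:
        return base, suffix
    return None


def accept_cookie(p3p_header: str | None, strict: bool = False) -> bool:
    """Decide whether to accept a third-party cookie given its P3P header value."""
    match = re.search(r'CP="([^"]*)"', p3p_header or "")
    if match is None:
        return False  # no compact policy at all: block the cookie
    for token in match.group(1).split():
        parsed = split_token(token)
        if parsed is None:
            if strict:
                return False  # strict reading: a malformed policy counts as no policy
            continue          # lenient reading: silently skip the unknown token
        base, suffix = parsed
        if base in OBJECTIONABLE and suffix != "i":
            return False
    return True


google_style = 'CP="This is not a P3P policy! See http://www.google.com/support/accounts/bin/answer.py?hl=en&answer=15165 for more info."'
honest = 'CP="CUR ADM OUR NOR STA NID"'
telemarketer = 'CP="NOI DSP COR TELo UNR PHY ONL"'

print(accept_cookie(google_style))               # True: every token is skipped
print(accept_cookie(google_style, strict=True))  # False
print(accept_cookie(honest))                     # True
print(accept_cookie(telemarketer))               # False: TELo is opt-out telemarketing
```

Under the lenient reading, a header made entirely of prose contains no recognized tokens, so it declares no objectionable practices and passes. Under the strict reading, it would be treated as no policy at all and the cookie would be blocked.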


The problem with self-regulatory privacy standards seems to be that the industry considers them entirely optional, and no regulator has yet stepped in to say otherwise. Perhaps because no regulators have challenged those who contend that circumventing P3P is acceptable, some companies have already announced that they are going to bypass the Do Not Track controls in IE because they do not like Microsoft’s approach to default settings (see also my blog post about why I think the industry’s position on ignoring DNT in IE is wrong).

Until we see enforcement actions to back up voluntary privacy standards such as P3P and (perhaps someday) Do Not Track, users will not be able to rely on them. Incentives for adoption and mechanisms for enforcement are essential. We are unlikely to see widespread adoption of a privacy policy standard if we do not address the most significant barrier to adoption: lack of incentives. If a new protocol were built into web browsers, search engines, mobile application platforms, and other tools in a meaningful way, such that there was an advantage to adopting the protocol, we would see wider adoption. However, in such a scenario, there would also be significant incentives for companies to game the system and misrepresent their policies, so enforcement would be critical. Incentives could also come in the form of regulations that require adoption or provide a safe harbor to companies that adopt the protocol. Before we go too far down the road of developing new machine-readable privacy notices (whether comprehensive website notices like P3P, icon sets, notices for mobile applications, Do Not Track, or anything else), it is essential to make sure that adequate incentives will be put in place for them to be adopted and that adequate enforcement mechanisms exist.

I have a lot more to say about the design decisions made in the development of P3P, where some of the problems are, why P3P is ultimately failing users, and why future privacy standards are also unlikely to succeed unless they are enforced. In fact, I wrote a 35-page paper on this topic that will be published soon in the Journal on Telecommunications and High Technology Law. Some of what I wrote above was excerpted from this paper. If you are contemplating a new privacy policy/label/icon/tool effort, please read some history first. Here is the abstract:

Necessary But Not Sufficient: Standardized Mechanisms for Privacy Notice and Choice

For several decades, “notice and choice” have been key principles of information privacy protection. Conceptions of privacy that involve the notion of individual control require a mechanism for individuals to understand where and under what conditions their personal information may flow and to exercise control over that flow. Thus, the various sets of fair information practice principles and the privacy laws based on these principles include requirements for providing notice about data practices and allowing individuals to exercise control over those practices. Privacy policies and opt-out mechanisms have become the predominant tools of notice and choice. However, a consensus has emerged that privacy policies are poor mechanisms for communicating with individuals about privacy. With growing recognition that website privacy policies are failing consumers, numerous suggestions are emerging for technical mechanisms that would provide privacy notices in machine-readable form, allowing web browsers, mobile devices, and other tools to act on them automatically and distill them into simple icons for end users. Other proposals are focused on allowing users to signal to websites, through their web browsers, that they do not wish to be tracked. These proposals may at first seem like fresh ideas that allow us to move beyond impenetrable privacy policies as the primary mechanisms of notice and choice. However, in many ways, the conversations around these new proposals are reminiscent of those that took place in the 1990s that led to the development of the Platform for Privacy Preferences (“P3P”) standard and several privacy seal programs.

In this paper I first review the idea behind notice and choice and user empowerment as privacy protection mechanisms. Next I review lessons from the development and deployment of P3P as well as other efforts to empower users to protect their privacy. I begin with a brief introduction to P3P, and then discuss the privacy taxonomy associated with P3P. Next I discuss the notion of privacy nutrition labels and privacy icons and describe our demonstration of how P3P policies can be used to generate privacy nutrition labels automatically. I also discuss studies that examined the impact of salient privacy information on user behavior. Next I look at the problem of P3P policy adoption and enforcement. Then I discuss problems with recent self-regulatory programs and privacy tools in the online behavioral advertising space. Finally, I argue that while standardized notice mechanisms may be necessary to move beyond impenetrable privacy policies, to date they have failed users and they will continue to fail users unless they are accompanied by usable mechanisms for exercising meaningful choice and appropriate means of enforcement.